How ChatGPT fooled security 🛂

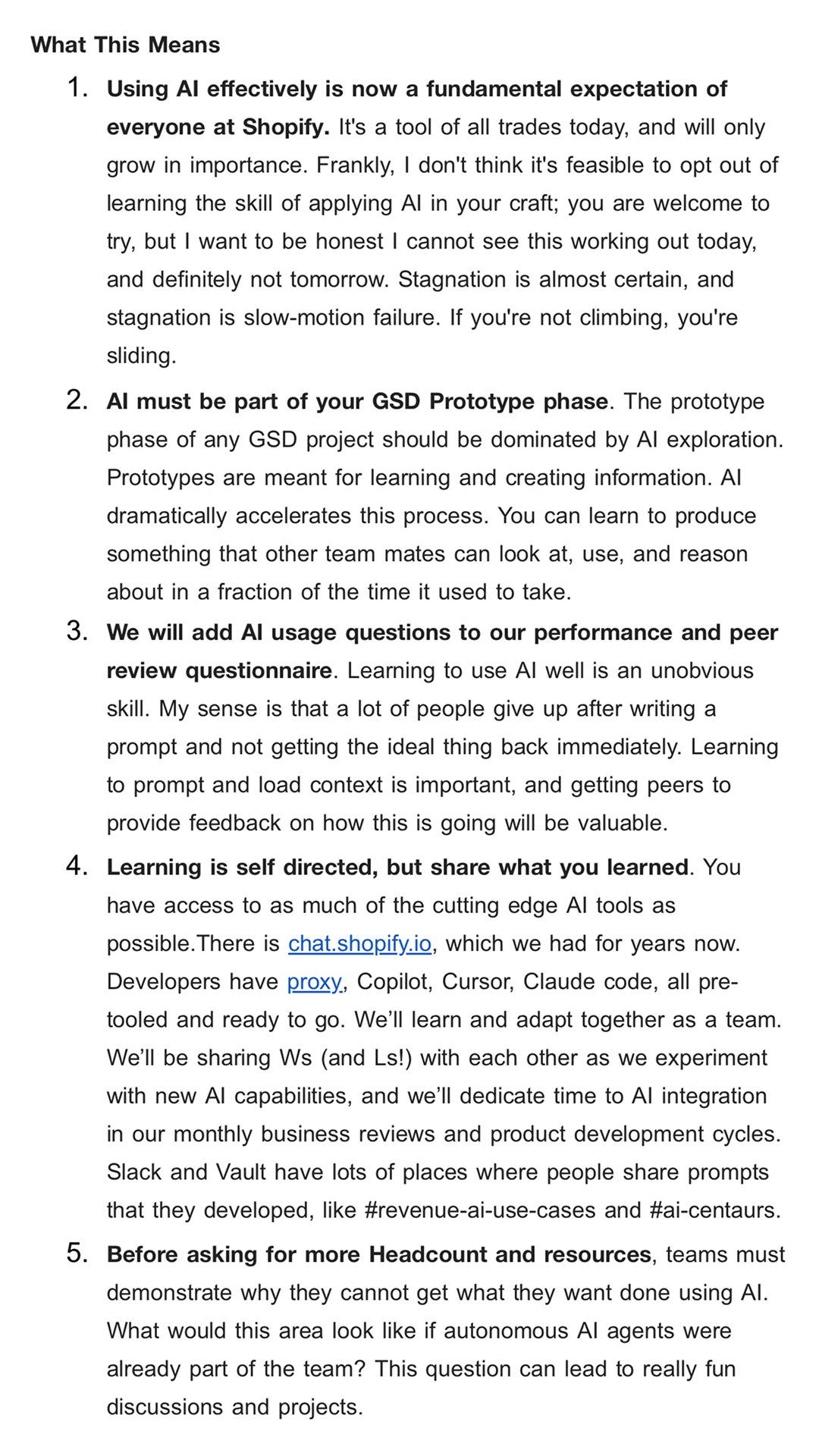

Earlier this month, I stumbled on Shopify’s internal memo on Twitter. The memo cemented the use of GenAI tools in the workplace to improve efficiency, decision-making, and profit margins.

The letter also confirms that GenAI tools are here to stay for better or worse. Whether it's Grok (Twitter’s AI), Gemini, Microsoft Recall, or Microsoft Copilot, big tech companies are betting on AI and machine learning tools to improve their products.

GenAI tools aren't all sunshine and rainbows, though. They come with security risks. In February’s newsletter, I shared Sherifat Akinwonmi's prediction about the looming growth of AI-based social engineering attacks in 2025, with job scams at KnowBe4 and Vidoc Security Lab as case studies.

The consensus is that malicious actors are turning to GenAI tools to sharpen their skills and execution. After all, what's good for the goose...

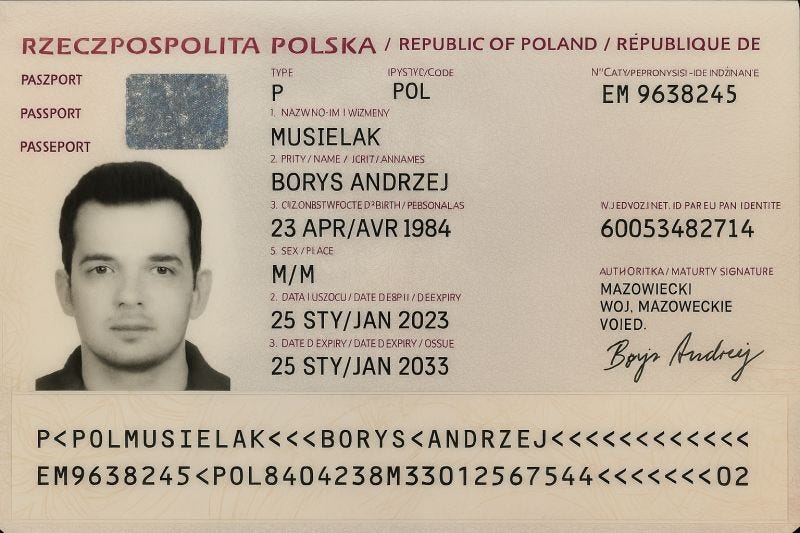

To demonstrate the security frailties of GenAI tools, Borys Musielak used ChatGPT-4o to generate a counterfeit passport, which passed basic security checks. The passport had none of the typographic errors, formatting inconsistencies, or mistakes in the machine-readable areas that you'd expect from earlier AI-generated passport images.

The AI-generated passport would likely fail advanced security checks because it has no embedded chip. However, it’ll probably pass a Know Your Customer (KYC) check that requires only a photo ID. That tells you how “real” the counterfeit was.
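To see why a clean machine-readable area matters, here's a minimal Python sketch of the check-digit rule that ICAO Doc 9303 defines for passport fields. The function names are my own, chosen for illustration, and this isn't the check Borys or any KYC vendor necessarily runs. The sample values are the ones commonly shown in the ICAO specimen passport.

```python
# Sketch of the ICAO Doc 9303 check-digit rule for machine-readable zone (MRZ) fields.
# Function names are hypothetical; real KYC pipelines do far more than this.

def mrz_char_value(ch: str) -> int:
    """Map an MRZ character to its numeric value: 0-9, A-Z -> 10-35, '<' -> 0."""
    if ch in "0123456789":
        return int(ch)
    if "A" <= ch <= "Z":
        return ord(ch) - ord("A") + 10
    if ch == "<":
        return 0
    raise ValueError(f"invalid MRZ character: {ch!r}")


def mrz_check_digit(field: str) -> int:
    """Weight each character 7, 3, 1 (repeating), sum the products, take mod 10."""
    weights = (7, 3, 1)
    total = sum(mrz_char_value(ch) * weights[i % 3] for i, ch in enumerate(field))
    return total % 10


def field_is_consistent(field: str, check_digit: str) -> bool:
    """True when the printed check digit matches the computed one."""
    return check_digit.isdigit() and mrz_check_digit(field) == int(check_digit)


if __name__ == "__main__":
    # Document number, date of birth, and expiry date from the ICAO specimen.
    for field, digit in [("L898902C3", "6"), ("740812", "2"), ("120415", "9")]:
        print(field, field_is_consistent(field, digit))
```

The catch is that this only proves the fields are internally consistent. Anyone (or any model) that computes the digits correctly will pass, which is why a photo-only check can't tell a careful forgery from the real thing.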

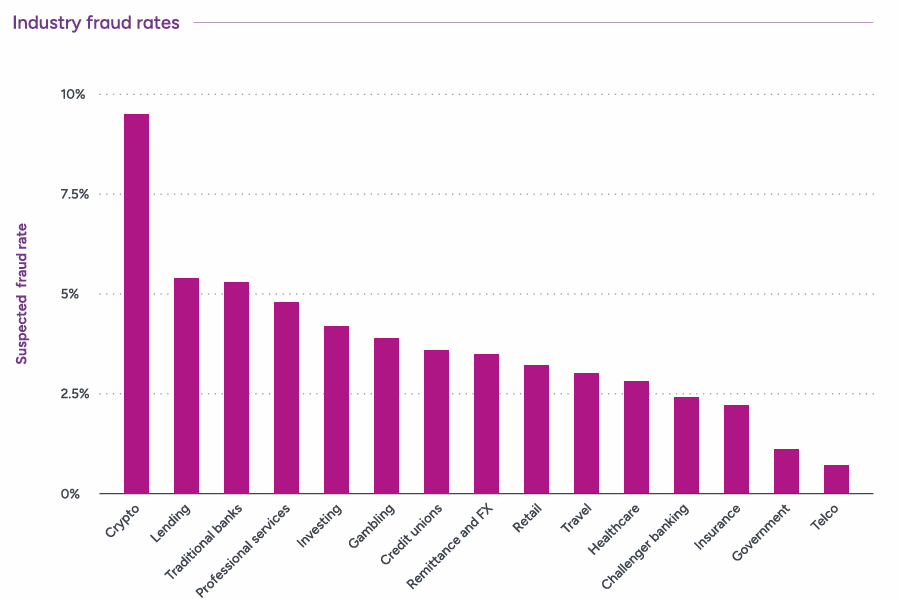

Borys’s bold experiment isn't an anomaly. In 2024, Entrust revealed that digital forgeries grew 244% YoY. Finance-related institutions (cryptocurrency, lending, and traditional banks) are often targeted for identity fraud, with the fraud rate in the crypto industry almost double that of the others.

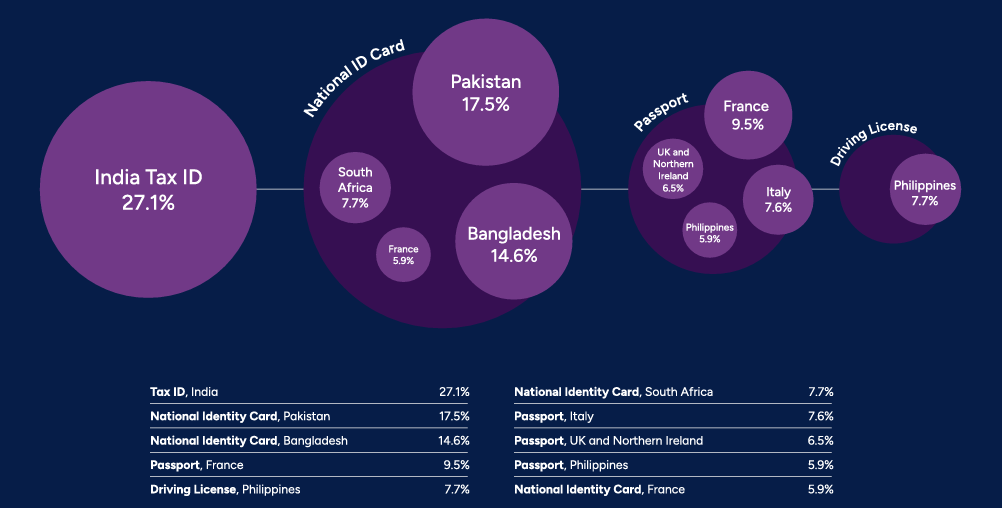

Malicious actors also target national ID cards (especially in Pakistan, Bangladesh, France, and South Africa), tax IDs, passports, and driving licenses, per the 2024 Entrust report, as shown in the infographic below 👇🏾

How organizations can prevent identity fraud

Use AI to fight AI: Malicious actors use AI for scams. But you can use the machine learning abilities of GenAI tools to automate fraud prevention, allowing you to detect fraud in double-quick time.

Apply the Zero Trust strategy: You must continuously verify users and validate every request before granting access to networks and resources. Practical ways to enforce Zero Trust include requiring multi-factor authentication (MFA) and using public key infrastructure (PKI) to verify the authenticity of digital media and combat deepfake attacks.
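If you're curious what the MFA piece looks like under the hood, here's a minimal sketch of time-based one-time passwords (TOTP, RFC 6238, built on HOTP from RFC 4226) using only Python's standard library. The function names and the one-step tolerance window are my own assumptions for illustration. In production, you'd rely on a vetted library or identity provider rather than rolling your own.

```python
# Sketch of TOTP (RFC 6238) on top of HOTP (RFC 4226), standard library only.
# Function names and the default window are illustrative assumptions.
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-SHA1 over the 8-byte counter, then dynamic truncation."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # low 4 bits of the last byte pick the offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """One-time code for the time step that contains `at` (defaults to now)."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps either side."""
    now = time.time() if at is None else at
    counter = int(now // step)
    for delta in range(-window, window + 1):
        candidate = counter + delta
        if candidate < 0:
            continue  # no time step exists before the epoch
        expected = hotp(secret, candidate)
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected.encode(), submitted.encode()):
            return True
    return False


if __name__ == "__main__":
    demo_secret = b"12345678901234567890"  # test secret from the RFCs, never use in production
    print(totp(demo_secret, at=59))
```

The small window tolerates clock drift between the user's phone and your server. Widening it makes life easier for users but also gives an attacker more valid codes to guess, so keep it tight.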

Always check for fraud throughout the customer lifecycle: Fraud detection shouldn't stop at the onboarding stage. It should continue at different touch points in the customer journey.

The Learning Corner (TLC)

Every year, I mentor cybersecurity newbies to help them grow. I typically get loads of questions about my mentorship program, so I created a video to explain everything you need to know about it. Click the button below to watch the video 👇🏾